KB Article #181849

SYSLOG: Too many messages reported by Edge Agent, insufficient disk space

Problem

The Amplify Edge Agent reports and monitors usage data for Axway cloud services and on-premise products that are used under subscription agreements, including SecureTransport. It collects usage events from connected products, aggregates the data, and generates a usage report. The report is uploaded automatically to the Amplify Platform, or users can generate reports manually and upload them to the platform.

If Syslog is used on the server where the Edge Agent is installed, too many messages may be written to Syslog in /var/log/syslog, filling the available space on the root file system. When this happens, the following types of messages may be reported:

Kafka related

kg-ln27 013ba69bb2e8[1217]: message repeated 96 times: [ [2021-09-17 15:08:31,025] INFO [Transaction State Manager 2]: Finished loading 0 transaction metadata from __transaction_state-27 in 111552954 milliseconds (kafka.coordinator.transaction.TransactionStateManager)]

kg-ln27 54a80215f2ff[1217]: [2021-09-17 15:08:31,100] INFO [Transaction State Manager 3]: Finished loading 0 transaction metadata from __transaction_state-1 in 24864733 milliseconds (kafka.coordinator.transaction.TransactionStateManager)

Flink related

kg-ln27 e6e926ba6800[1112]: 2021-09-23 18:22:00,087 [Window(TumblingEventTimeWindows(86400000), EventTimeTrigger, JsonSerializedTupleAggregationFunction, ProcessTimeWindowFunction) -> Map -> Sink: f6e9a14a-2815-462c-bffa-cbd44b5c36fe (1/1)] WARN org.apache.flink.metrics.MetricGroup - The operator name Window(TumblingEventTimeWindows(86400000), EventTimeTrigger, JsonSerializedTupleAggregationFunction, ProcessTimeWindowFunction) exceeded the 80 characters length limit and was truncated.

kg-ln27 e6e926ba6800[1112]: 2021-09-23 18:22:00,103 [Window(TumblingEventTimeWindows(86400000), EventTimeTrigger, JsonSerializedTupleAggregationFunction, ProcessTimeWindowFunction) -> Map -> Sink: f6e9a14a-2815-462c-bffa-cbd44b5c36fe (1/1)] WARN org.apache.flink.streaming.api.functions.sink.TwoPhaseCommitSinkFunction - Transaction KafkaTransactionState [transactionalId=Window(TumblingEventTimeWindows(86400000), EventTimeTrigger, JsonSerializedTupleAggregationFunction, ProcessTimeWindowFunction) -> Map -> Sink: 29b8f567-5dc9-47a0-8b61-902f7458dc26-fdaaed869c7e767a4ba54f74c039d468-4, producerId=9058, epoch=17169] has been open for 3408533044 ms. This is close to or even exceeding the transaction timeout of 300000 ms.

Resolution

The resolution is to mute the Kafka related log messages and to lower the logging level of the Flink related log messages.

To fully apply the changes, restart the Docker containers afterwards.

Muting the Kafka related logs

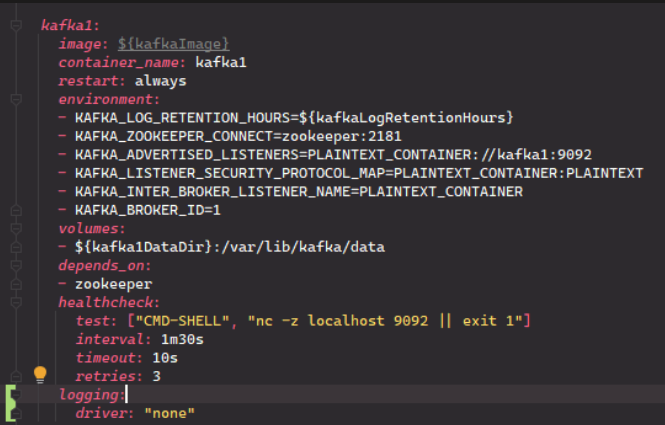

Edit the ./docker-compose.yml file and add this for each of the three Kafka containers (kafka1, 2, 3) and mind the indentation:

logging: driver: "none"

Example of how the Kafka config should look like
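For readers who cannot view the screenshot, a text sketch of the same change might look like the following. It is only an illustration: it assumes the service entries are named kafka1, kafka2 and kafka3 as described above, and it omits every other setting, so the surrounding keys and indentation depth in the real file will differ.

```yaml
# Sketch only, not the shipped docker-compose.yml.
# Assumption: the three Kafka services are named kafka1, kafka2, kafka3.
kafka1:
  # existing settings for this service stay unchanged
  logging:            # indent to the same level as the service's other keys
    driver: "none"    # Docker discards this container's log output

kafka2:
  # existing settings for this service stay unchanged
  logging:
    driver: "none"

kafka3:
  # existing settings for this service stay unchanged
  logging:
    driver: "none"
```

With the "none" driver, docker logs no longer returns output for these containers. A container's logging driver is fixed when it is created, so if the stack is managed with Docker Compose the Kafka containers probably need to be recreated (for example with docker-compose up -d) rather than only restarted for the change to take effect.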

Muting the Flink related logs

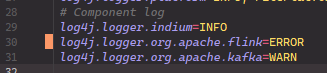

Edit the ./conf/agent/log4j.properties, at the very end of the file turn Flink logging level from WARN to ERROR:

log4j.logger.org.apache.flink=ERROR

Example of how the Flink config should look like